QuadTiles

QuadTiles are a geo-data storage/indexing strategy - it's more commonly referred to as hierarchical binning. The idea is to store a geo-database such that data for a specific location can be retrieved quickly, by dividing the data up by location, partitioning the world into tiles.

Note that we use the word tiles a lot in OpenStreetMap, and in fact we're normally talking about tiled map images, that's not really what this page is about although a QuadTile data indexing scheme has some similarities with Slippy map tilenames.

Note: the QuadTiles indexing strategy is analog to Geohash algorithm, as commented here.

Splitting the world into tiles

If we split the world into 4 tiles, (level 1 of zoom) then we would need 2 bits to give a tile address (topleft, topright, bottomleft, bottomright).

Each of those tiles would be about 20,000km in size. This is obviously too big to be practical, so we would need to split it into smaller tiles. Adding 2 more bits, we have 10,000km tiles (zoom level 2) - better, but still way too big. But of course, we can do this as much as we like. By the time we get to 32 bits (zoom level 16), our tiles are about 600m in size.

Tile size tradeoffs

The smaller tiles that you have, the more bits are required to address them. Also the smaller the tile, the more segment traversals are likely, and the more space will be used. But too big, and each tile will contain more information than the user requires, and could be a vast amount of data (e.g. city centres!).

Quadtile implementation

A quadtile is a recursive addressing scheme for 2 dimensional space, that is particularly useful in uneven datasets. Instead of partitioning our 32-bit address bitspace into, say, a tile address of

xxxxxxxx xxxxxxxx yyyyyyyy yyyyyyyy

Quadtiles use 2-bit tile interleaved addresses thus:

xyxyxyxy xyxyxyxy xyxyxyxy xyxyxyxy

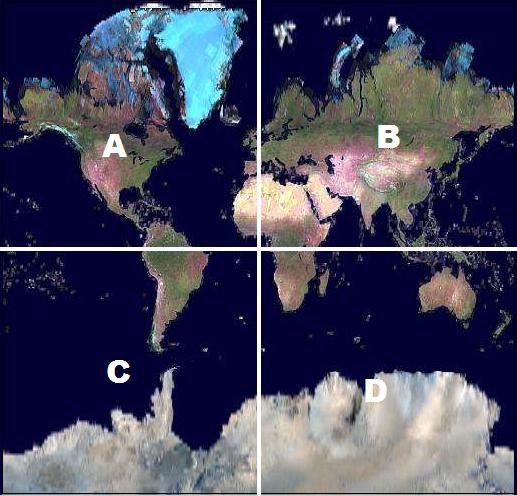

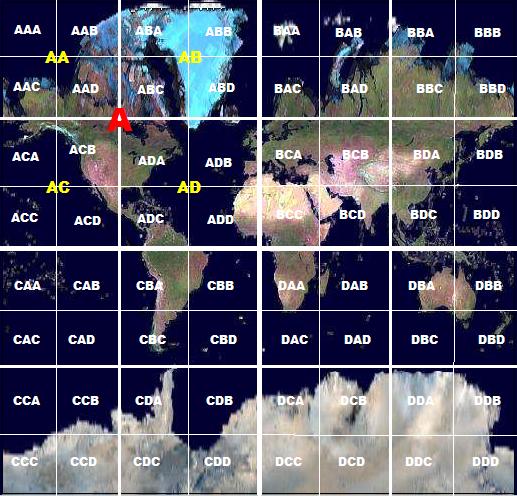

For example, if we use a shorthand for each XY pair of 00=A 01=C 10=B 11=D; observe the globe split into tiles:

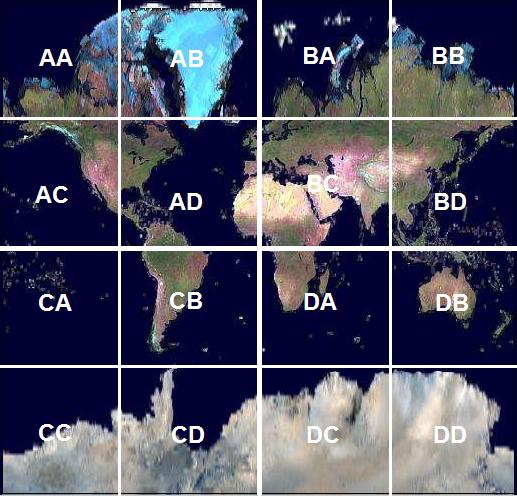

For the next level down, we repeat (using 4 bits)

And again:

This has a very important property: Tile ADA is a component of Tile AD that is a component of tile A.

We have thus substituted 2-d ranged coordinates (x,y) for a single address. As we read the address from left to right, we are steadily becoming more specific about the location.

Indexing single-point geo-locations

In September 2007 User:TomH was awarded a Lolcat of awesomeness "for greatly speeding up GPS point downloads by cunning use of QuadTiles". He has also changed the indexing of the main database to speed up core Protocol requests, by indexing node locations by quadtile. This particularly improved the efficiency of API operations which fetch a bounding-box of data. The result was a dramatic improvement in overall server performance.

FIXME: Need more technical details on what is actually implemented (as opposed to the ideas on the rest of this page)

Indexing tile intersections

FIXME: This section was written as a theoretical idea for a way of using quadtile indexing. Although we are now using quadtiles, it is not clear whether we are actually indexing tile intersections in this way. Are we just indexing node positions at the current time?

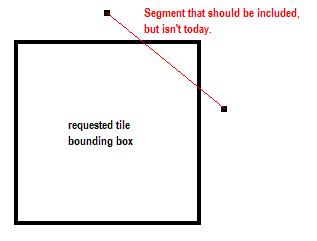

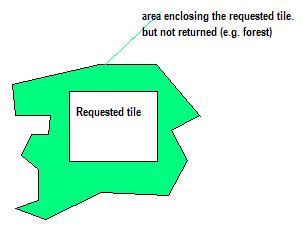

In the current API, you specify a bounding box for an area that you want to view. This then finds the nodes, finds the segments related to those nodes, then any missing nodes (where the other end of the segment lies outside the bounding box). This will unfortunately miss any segment that totally traverses the area, and is even harder to work out for enclosed areas.

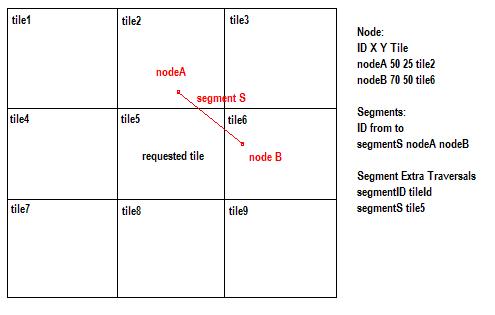

Instead of querying for a bounding box, if we store the 'names' of the tiles that each segment traverses (if it is more than just the source and destination node), we can solve the problem - for example

You should be able to see how this could apply also to areas and ways.

If you are trying to cache pre-rendered versions of these tiles, currently a node could move (and thus a segment), and you would have no idea that your rendering is now totally out of date.

Tilespace users

Say we have a nice map implementation like the slippy map. We could make our tiles match the size of the 32-bit addressable tiles, but, there's a problem - there's rather a lot of addressable tiles - 2^32 of them. And we want several sets of tiles, probably one set for each particular 'zoom' level, maybe with different levels of detail.

Also, looking at our above map, tile CAC is entirely ocean. That's 5000 square km with no data (1/64th of the entire area). We'd like to be able to say 'anything starting with CAC is empty'.

NBB: If we hadn't interleaved the space in a quadtile format, this is much harder (and less efficient). Instead of saying

010001?? ???????? ???????? ???????? = empty

we would have to say

000????? ???????? 101????? ???????? = empty

Thinking about this in terms of, say, a database, the former is selecting on a range of an index, and so offers much better performance. Furthermore, the former partitions the world very simply for the database - several RDBMSes have capabilities to do things like

make all items with this key value >= 000xxxxxxx......... and < 001xxxxxx........ go into data file A

make all items with this key value >= 001xxxxxxx......... and < 010xxxxxx........ go into data file B

etc., etc., etc. This indexing will be coherent with the way queries are being performed.

A nice addition is the fact that, say, the mainland European dataset can be expressed as 'tiles ADB and BCA'. This could potentially ease filtering when doing data extracts from the main OSM database.

Sidenote - Ditch storing the lon,lat values altogether

Some applications could ditch storing the lon,lat values altogether, by adding extra characters to the node quadtile address itself. This is, effectively, a form of fixed point arithmetic. Zoom level 32 (32 deep, requiring 32 characters or 64 bits) would be sufficient to place any node to within 1mm accuracy. For offline applications, this is a traditional space/time tradeoff - you can choose indexes, hashmaps and sets (buckets) in any combination that you like because the tree-space is always, consistently, traversable because left->right is always less->more specific.

Indeed, for some applications (maybe even OSM itself), you could argue for the node address actually being the identifier for the node. If we collapsed segments into simple 'ways with only 2 nodes'. A way is then an ordered list of nodes. A node is a location - but - a 64-bit quadtile identifier is enough to identify a location to within 1cm - so - why bother with node identifiers at all. Simply define ways as

TABLE WAY: WAY_ID LONG PK WAY_INDEX LONG PK NODE INT64 NULL SUB_WAY_ID LONG NULL

Where either you specify a NODE or a SUB_WAY (I.E a way composed of ways). You need to store extra way traversals (see the first section) to a defined level of accuracy (less than 1mm otherwise the table would be *huge*!):

TABLE WAY_TILE_TRAVERSALS: WAY_ID LONG WAY_INDEX LONG TILE INT32

You also need to store some tags, but these become

TABLE NODE_TAGS NODE_ID INT64 PK KEY VARCHAR VALUE VARCHAR

TABLE WAY_TAGS WAY_ID LONG PK KEY VARCHAR VALUE VARCHAR

You could run all of OSM from these 3 tables alone. Example Simplified Database.

Storing in a database - INT32 or char(16)

FIXME: This section was written when quadtile indexing was just an idea. We are now using quadtiles, but it is not clear whether we went with INT32 or char(16), and which of these query syntaxes will actually work on our database

You can store quadtile addresses as binary (INT32) or as a char(16). The former feels like it stores less space, but don't forget, databases usually pack only whole records into pages, so it's not an entirely simple calculation.

We want to be able to query the database at any level of granularity. So, I ought to be able to do a GET, say, on

/api/map?tile=CAC

and OSM to come back with

'yep - there isn't anything there'.

However, if I queried

/api/map?tile=ADB

it might come back with

'Too big, data set size=126000000'

But, looking at the map

/api/map?tile=ADBA and /api/map?tile=ADBC

probably return

'nothing there' as well.

To query for nodes in ADB, if I had stored ADB as binary, anything matching 001110?? ???????? ???????? ????????

So the query would be something like (for INT32s)

SELECT * FROM NODES WHERE QuadTile>= 0x38000000 AND QuadTile < 0x3C000000

Or, for CHAR(16) would be something like

SELECT * FROM NODES WHERE QuadTile LIKE 'ADB%'

Note the very useful query

SELECT COUNT(*) FROM NODES WHERE QuadTile LIKE 'ADB%'

Returning how much information is in an area of the map. Pure index lookup = very fast.

The latter may actually perform significantly faster on some databases, because it tends to partition its index space at the byte level rather than the (2-) bit level. YMMV. It's also simpler SQL.

Tile invalidation data feeds

There should be an RSS feed of tiles that have been updated. This would be someing like

11/9/2006 12:11 ADBAABCBADABCDDA 11/9/2006 12:12 ADBAABCBADABCDDA 11/9/2006 12:13 ADBAABCBAAAACDDE 11/9/2006 13:12 BCACBDEBAAAB

etc., etc. Note that in the last instance, there have 12 characters (24 bits) of precision specified. In this instance, we are saying 'this tile and all it's children have changed' - some heavy editing has occurred in a 13-square km region, and the updates have been collapsed into a single invalidation report.

I may also know the 'tile address' or addresses of regions that I've been editing; it's then reasonably trivial for me to eyeball if a tile change is of interest to me personally, without having to do bit pattern manipulation or long/lat range checking to find out.

How a client might interact with the API

One example might be our aforementioned map tile generator, for which we wish to cache regions of the map that we have already calculated.

You might define a quadtile structure, something like

public class Quadtile {

Quadtile[] children = new Quadtile[4];

Image cachedImage;

public Quadtile getChild(char c)

{

Quadtile nextChild = children[(c - 'A')];

return nextChild;

}

public Quadtile getTile(String address)

{

if( address.length() == 0 )

return this;

Quadtile nextChild = getChild(address.charAt(0));

if( nextChild == null )

return null;

return nextChild.getTile(address.substring(1));

}

public void invalidate(String address)

{

this.cachedImage = null;

Quadtile nextChild = getChild(address.charAt(0));

if( nextChild == null )

return;

return nextChild.invalidate(address.substring(1));

}

}

Then for each RSS feed item, you're just doing

class MyProg

{

Quadtile rootTile;

// .....

void onRssFeedInvalidation(string address)

{

rootTile.invalidate(address);

}

}

You would probably implement a visitor pattern rather than repeating the getTile traversal code, but the principle is the same.