Taginfo/Architecture

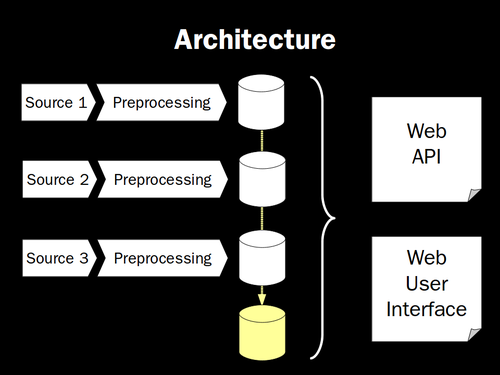

Taginfo collects information from several sources and brings it together in one place. This is done in several steps, which are organized by a few shell scripts in the sources directory.

In the first step, data from external sources is acquired and pre-processed. For each source a script is run that downloads the data (wiki pages, configuration files from editor code repositories, etc.) and parses it. The result is written to one SQLite database per source. Each database contains a table called source with the meta information of this source (name, last updated, ...), a table called stats containing some important statistics, and, depending on the source, a few other tables. For each source, the SQL scripts pre.sql and post.sql are run before and after the import into the database.

For most sources the data import is done with a few shell and Ruby scripts only. For generating the OSM database statistics, however, a C++ program called tagstats is used, which can create those statistics much faster and with less memory overhead than a script could.
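The order of operations for one source can be sketched as follows. This is only an illustration in Python, not the actual taginfo code: the contents of `PRE_SQL` and `POST_SQL`, the `pages` table and all column names are assumptions standing in for a source's real pre.sql and post.sql files.

```python
import sqlite3

# Hypothetical stand-in for a source's pre.sql: create the tables.
PRE_SQL = """
CREATE TABLE source (id TEXT, name TEXT, last_updated TEXT);
CREATE TABLE stats (key TEXT, value INTEGER);
CREATE TABLE pages (title TEXT, lang TEXT);
"""

# Hypothetical stand-in for post.sql: add indexes and compute statistics.
POST_SQL = """
CREATE INDEX pages_lang_idx ON pages (lang);
INSERT INTO stats (key, value) SELECT 'pages', count(*) FROM pages;
"""


def import_source(con, parsed_pages):
    """Run the pre script, import the parsed data, then run the post script."""
    con.executescript(PRE_SQL)
    with con:  # one transaction for the whole import
        con.execute("INSERT INTO source VALUES ('wiki', 'Wiki', datetime('now'))")
        con.executemany("INSERT INTO pages (title, lang) VALUES (?, ?)", parsed_pages)
    con.executescript(POST_SQL)
    con.commit()
```

Creating indexes and statistics only after the bulk insert is a common choice because it avoids updating them for every inserted row.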

To add another source, all you have to do is add a few scripts that generate an SQLite database with its data and make sure they are run from update_all.sh. Your scripts don't have to be in Ruby; any language can be used. Have a look at the existing scripts to see how it is done.
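Before hooking a new source into the update run, it can help to check that its database has the two tables every source is expected to provide. The helper below is a hypothetical sketch, not part of taginfo; it only looks at table names and does not check columns.

```python
import sqlite3

# The two tables the text above says every source database contains.
REQUIRED_TABLES = {"source", "stats"}


def missing_tables(db_path):
    """Return the set of required tables that are absent from the database."""
    con = sqlite3.connect(db_path)
    try:
        rows = con.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table'"
        ).fetchall()
    finally:
        con.close()
    return REQUIRED_TABLES - {name for (name,) in rows}
```

An empty result means both tables are present.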

In a second step, some of the data from all those SQLite databases is aggregated into an additional database (taginfo-master.db). This includes the content of the source and stats tables mentioned above, a list of languages, popular keys for the tag cloud, and the data used for the suggestions in the search box.
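One way to do this kind of aggregation in SQLite is to attach each source database to the master database and copy rows across with plain SQL. The sketch below assumes this approach; the table names `sources` and `master_stats` and all column names are made up for the example and do not reflect the real taginfo-master.db schema.

```python
import sqlite3


def aggregate_into_master(master_path, source_paths):
    """Copy the source and stats rows of each source database into one master database."""
    master = sqlite3.connect(master_path)
    try:
        master.execute(
            "CREATE TABLE IF NOT EXISTS sources (id TEXT, name TEXT, last_updated TEXT)"
        )
        master.execute(
            "CREATE TABLE IF NOT EXISTS master_stats (source_id TEXT, key TEXT, value INTEGER)"
        )
        master.commit()
        for path in source_paths:
            # ATTACH and DETACH must run outside of a transaction.
            master.execute("ATTACH DATABASE ? AS src", (path,))
            with master:
                master.execute(
                    "INSERT INTO sources SELECT id, name, last_updated FROM src.source"
                )
                # Assumes exactly one row in src.source, so the join
                # simply tags every stats row with the source id.
                master.execute(
                    "INSERT INTO master_stats "
                    "SELECT s.id, st.key, st.value FROM src.source AS s, src.stats AS st"
                )
            master.execute("DETACH DATABASE src")
    finally:
        master.close()
```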

In a further step, data is written into the taginfo-search.db database, which uses the FTS3 full-text search extension for SQLite. This data is mostly a copy of data from the other databases, but organized in a way that makes searching fast.
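As a rough illustration of what an FTS3 table looks like, the following sketch creates one and queries it with `MATCH`. The table name `ftsearch` and its two columns are assumptions for the example, and the code only works with an SQLite build that has the FTS3 extension enabled.

```python
import sqlite3


def build_search_db(path, rows):
    """Create an FTS3 table of (key, value) pairs, fill it, and return the connection."""
    con = sqlite3.connect(path)
    with con:
        con.execute("CREATE VIRTUAL TABLE ftsearch USING fts3(key, value)")
        con.executemany("INSERT INTO ftsearch (key, value) VALUES (?, ?)", rows)
    return con


def search(con, term):
    """Return all rows in which any column matches the full-text query."""
    return con.execute(
        "SELECT key, value FROM ftsearch WHERE ftsearch MATCH ? ORDER BY key, value",
        (term,),
    ).fetchall()
```

Matching against the table name instead of a single column searches all columns at once, so one query finds a term whether it appears as a key or as a value.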

After all databases have been created without error, they are moved into the right location so that the web user interface and API have access to them, and the taginfo web service is restarted so that it opens the new databases. The web service is written in Ruby using the Sinatra web framework. It mostly just reads the databases created in the import step and displays the right data, because all the complex processing has already been done on import.
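The "only publish when everything succeeded" idea can be sketched like this. The function name and the directory layout are hypothetical, and restarting the web service is left out.

```python
import os
from pathlib import Path


def publish_databases(build_dir, live_dir, names):
    """Move finished databases into the live directory, but only if none is missing."""
    build_dir, live_dir = Path(build_dir), Path(live_dir)
    missing = [n for n in names if not (build_dir / n).is_file()]
    if missing:
        # Nothing is moved, so the live directory keeps the previous databases.
        raise FileNotFoundError(f"not publishing, missing databases: {missing}")
    live_dir.mkdir(parents=True, exist_ok=True)
    for name in names:
        # os.replace overwrites the old file in a single step on POSIX systems,
        # provided both paths are on the same filesystem.
        os.replace(build_dir / name, live_dir / name)
```

Checking all files before moving any of them keeps a failed update run from leaving a mix of old and new databases in place.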

To allow users access to the collected information, the databases created in the import steps are compressed (using bzip2) and put in a place where they can be downloaded.
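The compression step amounts to something like the following sketch, which uses Python's standard `bz2` module rather than the bzip2 command line tool. The function name and the `.bz2` output naming are assumptions.

```python
import bz2
import shutil


def compress_database(db_path, out_path=None):
    """Write a bzip2-compressed copy of a database file and return its path."""
    out_path = out_path or f"{db_path}.bz2"
    with open(db_path, "rb") as src, bz2.open(out_path, "wb") as dst:
        # Stream in chunks so large databases are not loaded into memory.
        shutil.copyfileobj(src, dst)
    return out_path
```

Unlike the bzip2 tool's default behaviour, this keeps the uncompressed file in place.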